LLMs are restricted by complexity

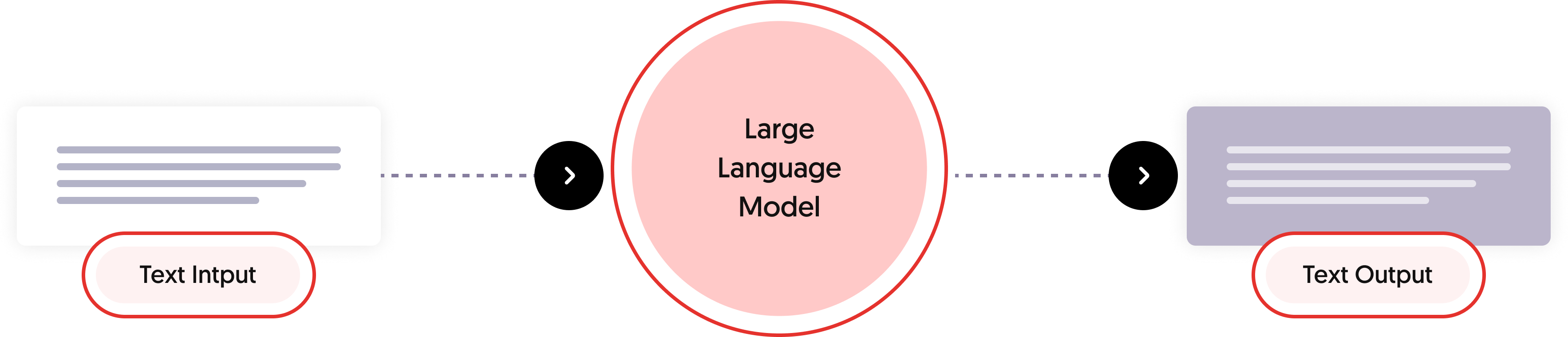

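LLMs can only receive and return a limited amount of data, measured in so-called tokens. Tokens are units of text that represent whole words, parts of words, punctuation, or other pieces of text. A model's token limit is the maximum number of tokens it can process at once; for the models listed below, this limit covers the prompt and the generated response combined.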

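The amount of data sent to a model affects how much computation it requires, and therefore its response time and operating cost. For this reason, APIs that provide access to LLMs usually charge by the number of tokens processed, typically counting both the tokens sent to the model and the tokens it returns.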
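As a rough illustration of per-token billing, the sketch below estimates the cost of a single request. The function name `estimate_cost` and the prices used are hypothetical placeholders, not any provider's actual rates; the sketch assumes that input and output tokens are billed at separate per-1,000-token prices, as many LLM APIs do.

```python
def estimate_cost(prompt_tokens: int, completion_tokens: int,
                  price_in_per_1k: float, price_out_per_1k: float) -> float:
    """Return the cost of one request, billing input and output tokens separately.

    The prices are hypothetical placeholders; check the provider's price list.
    """
    input_cost = (prompt_tokens / 1000) * price_in_per_1k
    output_cost = (completion_tokens / 1000) * price_out_per_1k
    return input_cost + output_cost


# Hypothetical prices: 0.01 per 1,000 input tokens, 0.03 per 1,000 output tokens.
long_prompt = estimate_cost(3000, 500, 0.01, 0.03)   # e.g. full chat history sent
short_prompt = estimate_cost(800, 500, 0.01, 0.03)   # e.g. only the relevant facts sent
print(f"long prompt: {long_prompt:.3f}, short prompt: {short_prompt:.3f}")
```

With the same reply length, cutting the prompt from 3,000 to 800 tokens roughly halves the cost of the request under these assumed prices.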

Examples of token limits of well-known LLMs are:

- GPT-3.5 Turbo: 4,096 tokens

- GPT-4: 8,192 tokens

- GPT-4 32k: 32,768 tokens
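The limits listed above can be used to check, before calling a model, whether a prompt will fit. The following is a minimal sketch, assuming the rough rule of thumb of about 4 characters per English token and an arbitrary reserve of 500 tokens for the reply (because the limit covers prompt and reply together). The names `TOKEN_LIMITS`, `estimate_tokens`, and `fits_in_context` are hypothetical; a real tokenizer such as OpenAI's `tiktoken` would give exact counts.

```python
# Token limits from the list above (context-window sizes in tokens).
TOKEN_LIMITS = {
    "gpt-3.5-turbo": 4096,
    "gpt-4": 8192,
    "gpt-4-32k": 32768,
}


def estimate_tokens(text: str) -> int:
    """Roughly estimate the number of tokens in English text.

    Assumption: about 4 characters per token, rounded up. A real tokenizer
    gives exact counts for a specific model.
    """
    return (len(text) + 3) // 4


def fits_in_context(prompt: str, model: str, reserved_for_reply: int = 500) -> bool:
    """Check that the prompt plus room for the reply stays within the model's limit."""
    return estimate_tokens(prompt) + reserved_for_reply <= TOKEN_LIMITS[model]


prompt = "Recommend activities in Lisbon for next weekend. " * 400
for model in TOKEN_LIMITS:
    print(model, estimate_tokens(prompt), fits_in_context(prompt, model))
```

Here the prompt is estimated at 4,900 tokens, so together with the 500 reserved tokens it exceeds the GPT-3.5 Turbo limit but fits within GPT-4 and GPT-4 32k.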

For complex conversations, it can be necessary to break the conversation down into multiple sub-conversations.

A good example is a tourism recommendation chat. In the first part of the conversation, the chat assistant has to find out where and when the person wants to travel. Once this information is obtained, the matching events can be loaded and passed to the next sub-conversation, which recommends activities. Because each sub-conversation only receives the information it needs, this approach sends fewer tokens to the model and thus saves computation time and cost.
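The tourism example can be sketched as two phases, without any actual model call. Everything here is hypothetical and for illustration only: the `TripInfo` structure, the in-memory `EVENTS` catalogue, and the helpers `load_events` and `build_recommendation_prompt`. The sketch assumes the destination and date have already been extracted from the first sub-conversation.

```python
from dataclasses import dataclass


@dataclass
class TripInfo:
    """Result of the first sub-conversation (hypothetical structure)."""
    destination: str
    date: str


# Hypothetical event catalogue; a real application might query a database or API.
EVENTS = {
    ("Lisbon", "2024-06-13"): ["Santo Antonio festival", "Fado evening in Alfama"],
    ("Vienna", "2024-06-13"): ["Open-air opera screening"],
}


def load_events(trip: TripInfo) -> list[str]:
    """Load only the events matching the destination and date (hypothetical helper)."""
    return EVENTS.get((trip.destination, trip.date), [])


def build_recommendation_prompt(trip: TripInfo, events: list[str]) -> str:
    """Start the second sub-conversation with a compact summary, not the full history."""
    event_lines = "\n".join(f"- {event}" for event in events)
    return (
        f"The user travels to {trip.destination} on {trip.date}.\n"
        f"Events available then:\n{event_lines}\n"
        "Recommend activities based on these events."
    )


# Phase 1: the chat used to find out where and when the user travels.
phase_one_history = [
    "Assistant: Where would you like to travel?",
    "User: Somewhere warm in Europe, maybe Portugal.",
    "Assistant: Which city, and when?",
    "User: Lisbon, on 13 June 2024.",
]
trip = TripInfo(destination="Lisbon", date="2024-06-13")  # assumed extracted from phase 1

# Phase 2: only the extracted facts and the matching events are sent to the model.
prompt = build_recommendation_prompt(trip, load_events(trip))
print(prompt)
```

Because the second sub-conversation only receives the destination, the date, and the matching events, neither the phase-1 chat history nor the events for other cities and dates count against the token limit or the bill. How the destination and date are extracted in phase 1 is left open in this sketch.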